Creating a website for your business is one thing; improving its ranking on the SERPs by keeping up with the latest marketing trends is another. Among the many SEO tools at your disposal, Google Search Console is worth using to analyze the performance of your SEO campaign, because it highlights the indexing issues your website runs into during crawling and indexing. Google Search Console shows which pages on your website have been indexed and lists the problems Googlebot encountered while trying to crawl and index the rest. Each status report covers a different set of indexing issues. You can then drill into the details of these reports and fix the issues to build brand awareness, increase traffic to the website, improve the conversion rate and much more.

What is Google Search Console?

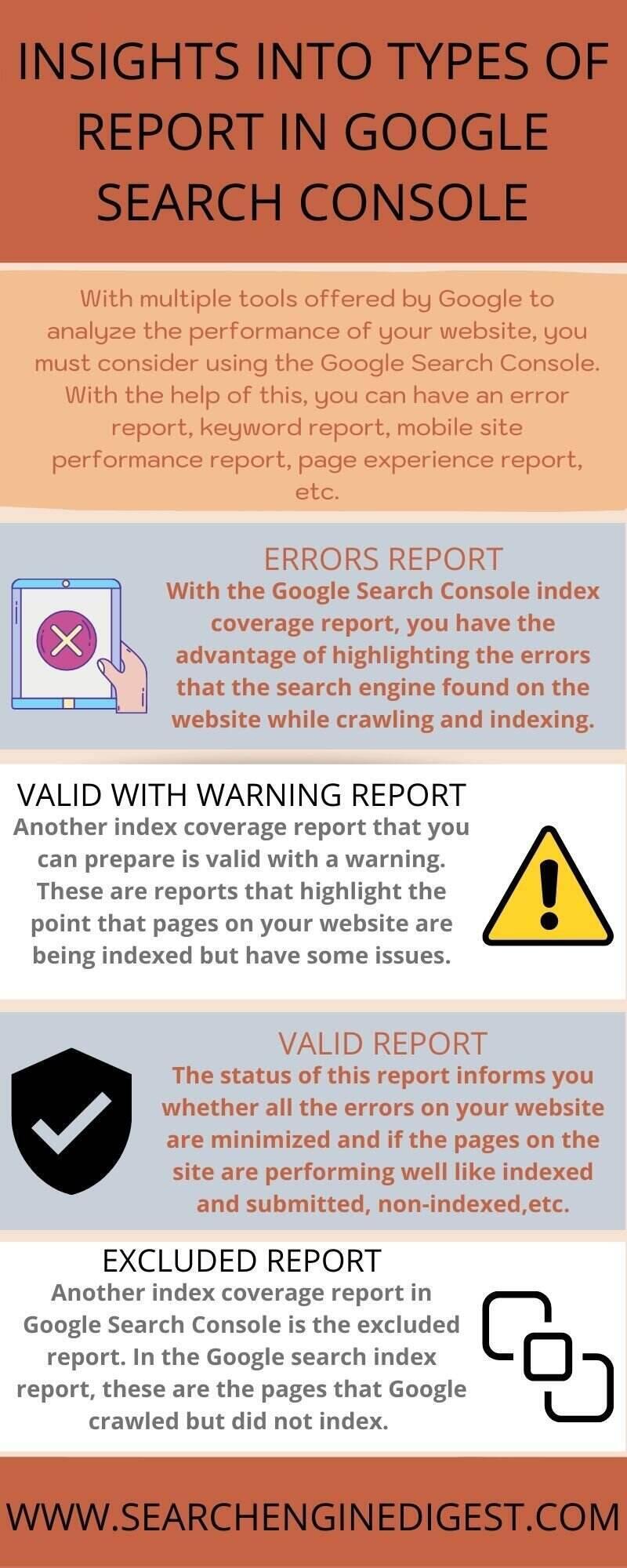

Google Search Console (formerly known as Google Webmaster Tools) is a free tool from Google that helps marketers monitor their website and adjust their SEO plan & strategy to improve its ranking. Google renamed Webmaster Tools to Google Search Console on May 20, 2015. With this tool, you can get an error report, a keyword (search query) report, a mobile site performance report, a page experience report, etc. It is designed to show the indexing status of your website and help you make SEO-friendly changes: fixing technical SEO errors, submitting XML sitemaps, analyzing backlinks and much more.

Insights into the types of reports in Google Search Console

- Errors report – The Google Search Console index coverage report highlights the errors that the search engine found on your website while crawling and indexing it. When Googlebot hits an error on a page, it skips that page and moves on to crawl other sections of the website. The affected page is then not indexed, so it is not visible to your target audience, which can lower your website ranking and hurt the performance of your SEO campaign.

When Googlebot runs into an indexing issue with a particular page, that page is not added to Google’s index. Just as an SEO audit tells you which SEO metrics need to be optimized, the index coverage report tells you which pages were crawled and indexed and how many pages were left out because of one SEO mistake or another. Some of the common SEO errors that the index coverage report can reveal are listed below:

- 4xx and 5xx errors – The index coverage report flags pages that returned 4xx or 5xx HTTP status codes when Googlebot tried to crawl them. A 4xx code is a client error: the server received the request but cannot or will not fulfil it, for example because the page no longer exists or has moved without a redirect (404 Not Found, 410 Gone) or because access is restricted (401, 403). These HTTP status codes affect the performance of the SEO campaign, and search engines treat pages that return different 4xx codes in different ways.

The other error class to focus on in this report is 5xx status codes. A 5xx code is a server error: the request was valid, but the server failed to respond with the page. Google’s crawl reveals these errors, and you can then adjust your SEO plan & strategy so that the page loads properly once the server problem is fixed. A technical SEO audit helps you find what needs to change so that the page loads quickly when there is no server error, and it can also check related issues such as keyword cannibalization, duplicate metadata, whether the website is optimized for mobile devices, HTTP versus HTTPS, SSL certificates, XML sitemap status, etc.
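
To see which category a URL falls into before Google reports it, you can check the status codes of your own pages. Below is a minimal Python sketch, assuming you already have a list of your URLs; `fetch_status`, `coverage_bucket` and `summarize` are hypothetical helper names, and the bucket labels are a rough grouping of our own, not Search Console’s exact wording.

```python
from collections import Counter
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status code for url using a HEAD request.

    urlopen follows redirects automatically, so 3xx codes are normally not
    seen here; some servers also reject HEAD with 405, so retry with GET then.
    """
    request = Request(url, method="HEAD")
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as err:  # urllib raises HTTPError for 4xx and 5xx responses
        return err.code


def coverage_bucket(status: int) -> str:
    """Group a status code into a rough error category (our own labels)."""
    if 200 <= status < 300:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if status == 401:
        return "unauthorized (401)"
    if status == 404:
        return "not found (404)"
    if 400 <= status < 500:
        return "other client error (4xx)"
    if 500 <= status < 600:
        return "server error (5xx)"
    return "unknown"


def summarize(statuses: dict[str, int]) -> Counter:
    """Count how many URLs fall into each bucket."""
    return Counter(coverage_bucket(code) for code in statuses.values())
```

In practice you would build the `statuses` dictionary by calling `fetch_status` on each URL from your sitemap; the network call is kept separate so the grouping logic can be checked offline.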

- Blocked by robots.txt – Another error that the crawl report highlights is ‘Blocked by robots.txt’. Businesses of all sizes want the content on their website to be well organized so that they retain visitors, build brand awareness, improve the conversion rate, etc. The better organized the content, the more likely users are to spend time on the pages you want them to see. Not every page needs to appear in search results (for example, some paginated pages), and robots.txt is used to stop search engine crawlers from crawling the pages you don’t want them to.

In the index coverage report, ‘Submitted URL blocked by robots.txt’ means that you submitted the page for indexing (for example, in your XML sitemap) but a rule in robots.txt blocks it. That Disallow rule tells the search engine not to crawl the page, even though you have asked Google to index it. If you want the page to be indexed, remove or narrow the rule that matches the page’s URL path; you do not need to delete the whole robots.txt file.
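
Before submitting URLs, you can check them against your robots.txt rules. A minimal sketch using Python’s standard `urllib.robotparser`, assuming you paste in the text of your own robots.txt; the rules and URLs below are made-up examples. Python’s parser follows the original robots.txt conventions and may not match Google’s handling of every rule (for example `*` and `$` wildcards, or longest-match precedence between Allow and Disallow), so treat the result as a quick sanity check rather than as what Googlebot will do.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, download your site's
# /robots.txt and pass its text in here.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /checkout
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# URLs you plan to list in your sitemap (made-up examples).
submitted = [
    "https://example.com/blog/seo-tips",
    "https://example.com/private/report",
    "https://example.com/checkout/step-2",
]
print(blocked_urls(ROBOTS_TXT, submitted))
```

Any URL this prints is one you are asking Google to index while also telling it not to crawl, which is exactly the conflict the report flags.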

- Unauthorized request (401) – Another error you may find in the index coverage report is the 401 Unauthorized error. It means that the request could not be authenticated because it lacked valid authentication credentials for the requested resource. As with most HTTP error codes you come across in the index coverage report, a 401 error can be a real problem for your business.

Visitors who hit these errors may conclude that the website is unreliable and leave, and your website ranking may drop for the relevant keywords or search terms. Businesses increasingly run PPC ads such as Facebook Ads, LinkedIn Ads and YouTube Ads; if visitors arriving from those ads do not find your website trustworthy, they are unlikely to recommend it to friends and family, which hurts your peer-to-peer marketing strategy. Because 401 errors matter, here are a few tips for diagnosing and fixing them:

- Check the requested URL.

- Clear relevant cookies & cache.

- Uninstall extensions, modules or plugins.

- Check for unexpected database changes.

- Redirect error – The next issue to look at in the Google Search Console index coverage report is redirect errors. A redirect sends visitors (and crawlers) from one URL to another, and it can be permanent or temporary. The 301 and 302 redirects are the ones to get right so that you keep visitors on the website and preserve the time they spend on pages.

A 301 redirect is a permanent redirect that sends users from a webpage to a new location, such as a newly created section or a subsection of the website. A 302 redirect means that the move is only temporary and that the original URL is expected to return, for example while the content on the page is being updated or its images are being optimized.

Whatever form of online marketing brings your target audience to the website, be it PPC ads or search engine marketing, you need engaging content to hold their interest. The same applies when you work on redirects: make sure visitors land on relevant content and, where helpful, tell them clearly what is changing on the website and whether the change is permanent or temporary.

A redirect error can be one of several types: the redirect chain was too long, the redirects formed a loop, the redirect URL exceeded the maximum URL length, and more.
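
To catch chains and loops before Google does, you can trace redirects through a map of source URLs to target URLs, for example one exported from your CMS or server configuration. A minimal sketch, assuming such a map exists; `trace_redirects` is a hypothetical helper, and the 10-hop default is an assumption based on the limit Google’s crawler documentation describes, so adjust it as needed.

```python
def trace_redirects(start: str, redirects: dict[str, str], max_hops: int = 10) -> tuple[str, list[str]]:
    """Follow start through the redirects map and classify the outcome.

    Returns ("ok", chain), ("loop", chain) or ("too_many_hops", chain),
    where chain lists every URL visited, starting with start.
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return "loop", chain  # came back to a URL already visited
        seen.add(current)
        if len(chain) - 1 > max_hops:
            return "too_many_hops", chain  # longer than the crawler is assumed to follow
    return "ok", chain  # the last URL does not redirect any further


# Hypothetical redirect map: one two-hop chain and one loop.
site_redirects = {
    "/old-shoes": "/shoes",
    "/shoes": "/products/shoes",
    "/sale": "/offers",
    "/offers": "/sale",
}
for url in ("/old-shoes", "/sale"):
    print(url, trace_redirects(url, site_redirects))
```

A chain that ends in "ok" but passes through several hops is still worth flattening by pointing the first URL straight at the final one.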

- Page not found (404) – The next error to focus on in the index coverage report is the 404 or ‘page not found’ error. The server responded, but it could not find the requested URL. These errors appear when the website has broken links, when content such as a category page has been moved permanently without a redirect, when the website theme automatically creates pages that should not exist, etc.

To keep users engaged and preserve their trust in your website, redirect these URLs to a relevant new location or publish new content at them. If you remove a page from your website, also remove it from your sitemap to avoid this error. A regular SEO audit shows you which pages exist on the website, so you can focus on writing unique content for them.
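
To find sitemap entries that now return 404, you can cross-check your sitemap against status codes you have already collected. A minimal sketch, assuming a standard sitemaps.org `urlset` file and a hypothetical `status_by_url` dictionary (for example, from your own crawler); it treats 410 Gone the same way as 404.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Return every <loc> value listed in a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def stale_sitemap_entries(sitemap_xml: str, status_by_url: dict[str, int]) -> list[str]:
    """List sitemap URLs whose last known status is 404 or 410."""
    return [url for url in sitemap_urls(sitemap_xml) if status_by_url.get(url) in (404, 410)]


SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-category</loc></url>
</urlset>"""

# Hypothetical status codes gathered by your own crawl.
STATUSES = {"https://example.com/": 200, "https://example.com/old-category": 404}
print(stale_sitemap_entries(SITEMAP, STATUSES))
```

Each URL it prints should either be redirected, given new content, or dropped from the sitemap.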

- Marked ‘noindex’ – The next error in the index coverage report is the ‘Submitted URL marked noindex’ error. It means that you submitted the page to the search engine for indexing, but the page itself tells Google not to index it. To remove this error, remove the ‘noindex’ directive, which can be a robots meta tag or an X-Robots-Tag HTTP response header. Check the page’s source code and response headers for ‘noindex’; to fix it, look in your CMS settings or modify the page’s code directly. Some points to check when looking for the cause of a ‘noindex’ error are listed below.

- Check the URL path.

- Make sure search engines can index your page and website.

- Ensure that your page is not password protected.

- Check if the page is a members-only page.

- Inspect the page with the URL Inspection tool.

- Check the last crawled date.
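
The source-code check for ‘noindex’ can be automated by looking at robots meta tags and the X-Robots-Tag header. A minimal sketch using only Python’s standard library, assuming you already have the page HTML and response headers (for example, from a crawler); `noindex_sources` is a hypothetical helper, and it treats the `none` directive as containing noindex.

```python
import re
from html.parser import HTMLParser
from typing import Optional


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            self.directives.extend(d.strip().lower() for d in content.split(","))


def noindex_sources(html: str, headers: Optional[dict[str, str]] = None) -> list[str]:
    """Return where a noindex (or 'none') directive was found: meta tag and/or header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    sources = []
    if {"noindex", "none"} & set(parser.directives):
        sources.append("meta tag")
    for name, value in (headers or {}).items():
        # X-Robots-Tag values look like "noindex" or "googlebot: noindex, nofollow".
        tokens = re.split(r"[\s,:]+", value.lower())
        if name.lower() == "x-robots-tag" and {"noindex", "none"} & set(tokens):
            sources.append("X-Robots-Tag header")
    return sources


print(noindex_sources('<head><meta name="robots" content="noindex, follow"></head>'))
```

An empty list means neither location carries a noindex directive, so the cause is more likely elsewhere (for example, a CMS setting that has not been published yet).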

- Crawl issue – Crawl issues are another point to focus on in the index coverage report. If you submitted a page for indexing and the report shows that Google encountered a crawl error, debug the page with the URL Inspection tool. One possible cause is that the page is very large and the search engine could not download all of it; in that case, divide the content into clear sections and subsections, or across several pages, so that it loads faster. To solve the indexing issue and get Google to crawl your page, consider the following points:

- Improve the page loading speed.

- Fix URL errors (if any).

- Identify and fix broken internal links.

- Fix pages with denied access.

- Remove wrong pages from the sitemap.

- Resolve duplicate pages.

- Fix incorrect JavaScript and CSS usage.
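
For broken internal links, a small script can compare the links on a page with status codes you have already collected. A minimal sketch, assuming a hypothetical `status_by_url` dictionary from your own crawl; links whose status is unknown are skipped rather than guessed.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def broken_internal_links(page_url: str, html: str, status_by_url: dict[str, int]) -> list[tuple[str, int]]:
    """Return (url, status) for internal links whose known status is 400 or above."""
    collector = LinkCollector()
    collector.feed(html)
    site_host = urlparse(page_url).netloc
    broken = []
    for href in collector.hrefs:
        # Resolve relative links and drop #fragments before looking them up.
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlparse(absolute).netloc != site_host:
            continue  # external, mailto: or javascript: link
        status: Optional[int] = status_by_url.get(absolute)
        if status is not None and status >= 400:
            broken.append((absolute, status))
    return broken
```

Running it on each page of the site and fixing or removing the reported links keeps crawlers from wasting requests on dead ends.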

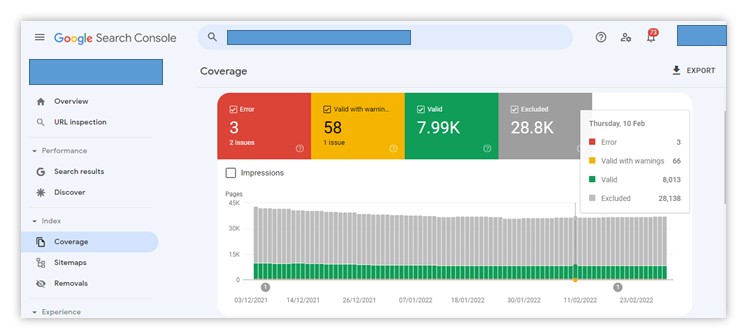

- Valid with warning report – Another index coverage status is ‘Valid with warning’. It covers pages on your website that are indexed but have some issues. The report shows how many pages were indexed during the crawl despite issues such as the page not appearing for the relevant keywords or duplicate URLs existing for the same page.

The pages in this report are valid, but they carry warnings. For example, a page may be listed in your XML sitemap yet blocked by robots.txt. These warnings are not as severe as errors, but you still cannot ignore them, as they may affect impression share, click-through rate, local visibility, etc. Content on the website should be easy to crawl if you want it to rank higher on the SERPs.

Be very sure about which pages on your website you want to block. You can block crawling for the whole site, for a section of it or on a page-by-page basis. Keep in mind that robots.txt blocks crawling, not indexing: Google can still index a blocked URL if other pages link to it and report it as indexed though blocked by robots.txt. Such a page may appear in the search results with a poor snippet, because the search engine could not read its title tag, meta description or page content. Because Googlebot cannot crawl a blocked page, it also cannot see a noindex directive on that page. If you want a page dropped from the index, allow crawling and add a noindex directive; once Google recrawls the page and sees the directive, it drops the page from the index.
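
The interaction between robots.txt and noindex is easy to get backwards. The sketch below encodes the reasoning above as a simplified decision function; it is a mental model under our own assumptions (for example, that links from other pages are what let a blocked URL get indexed), not Google’s actual logic, and `expected_outcome` is a hypothetical name.

```python
def expected_outcome(crawl_allowed: bool, has_noindex: bool, linked_from_elsewhere: bool) -> str:
    """Rough model of how robots.txt blocking and noindex interact (simplified)."""
    if not crawl_allowed:
        # Googlebot never fetches the page, so any noindex on it goes unseen.
        if linked_from_elsewhere:
            return "may be indexed without its content (indexed, though blocked by robots.txt)"
        return "probably not indexed, but not because of noindex"
    if has_noindex:
        return "dropped from the index after Google recrawls and sees noindex"
    return "eligible for indexing"


# Blocking a page AND adding noindex does not remove it: the tag is never read.
print(expected_outcome(crawl_allowed=False, has_noindex=True, linked_from_elsewhere=True))
```

The first branch is the case the warning describes; the second branch of the `crawl_allowed` check is the one to aim for when you actually want a page out of the index.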

- Valid report – Another index coverage status in Google Search Console is ‘Valid’. It tells you that these pages have no errors and are indexed. When Google crawls the site, it confirms that the pages you want indexed are working properly, that the sitemaps you submitted are being processed, etc.

Just as a PPC audit shows you how a PPC campaign is performing, this report shows you the indexing health of your website. Some of the statuses you can find in this report are listed below.

- Submitted and indexed – The URL you submitted for indexing was indexed. Indexed pages are eligible to appear in search results for the keywords or search terms related to your business’s products or services.

- Indexed and not submitted in sitemap – Google discovered the URL and indexed it even though it was not listed in your sitemap. The page was indexed, but Google had to find it on its own. Search engines, Google and Bing alike, work best when you tell them clearly which content you want indexed, so define the pages you want indexed and include them in a sitemap. This helps Google discover and crawl them more quickly, which can support more traffic and a better website ranking.
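
If your CMS does not generate a sitemap for you, a basic one is easy to produce. A minimal sketch that writes a sitemaps.org `urlset` containing only `<loc>` entries, assuming you already have the list of URLs you want indexed; optional fields such as `<lastmod>` are left out, and `build_sitemap` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a sitemaps.org urlset document listing each URL once, in order."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in dict.fromkeys(urls):  # drop duplicates but keep the original order
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes & as &amp;
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    pages = [
        "https://example.com/",
        "https://example.com/services",
        "https://example.com/catalog?category=shoes&page=2",
    ]
    print(build_sitemap(pages))
```

Save the output as `sitemap.xml` on your site and submit it in Search Console so that Google no longer has to discover these pages on its own.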

- Indexed and marked as canonical – Once the crawl is complete and your pages are indexed, identify any pages that duplicate each other. Where several URLs carry the same content, indicate which URL is the preferred (canonical) version.

A canonical tag works a little like picking the winner of an A/B test, where you choose the version that drives more traffic to the landing page and yields a better return on investment. A canonical tag is a line of HTML (a link element with rel="canonical") that tells search engines which version of a URL to prioritize, so that all ranking signals are consolidated on that version. Add canonical tags to the duplicate pages to tell search engines which page is the preferred one. Even so, focus on unique content so that duplicates do not dilute the authority the page earns from external links. Not every SEO myth that puts external linking in a bad light is valid, and backlink analysis still matters: focus on earning links from authoritative websites, not black hat websites. Links from trustworthy websites increase users’ trust, help build brand awareness on social media platforms, etc.
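
To audit duplicates, you can check which canonical URL each page declares. A minimal sketch, assuming you have each page’s HTML; it reads only the HTML link element and ignores canonicals sent in HTTP headers, and `canonical_url` is a hypothetical helper name.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = {name: (value or "") for name, value in attrs}
        rel_values = attributes.get("rel", "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonical = attributes["href"]


def canonical_url(page_url: str, html: str) -> Optional[str]:
    """Return the page's declared canonical URL as an absolute URL, or None."""
    parser = CanonicalParser()
    parser.feed(html)
    if parser.canonical is None:
        return None
    return urljoin(page_url, parser.canonical)


print(canonical_url("https://example.com/shoes?color=red", '<link rel="canonical" href="/shoes">'))
```

If every duplicate URL in a group resolves to the same canonical, the tags are consistent; a page that returns None or points somewhere else is the one to fix.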

- Excluded report – Another index coverage status in Google Search Console is ‘Excluded’. These are pages that Google did not index, often because you deliberately told it not to. When you tell the search engine to exclude pages, be very sure which pages you exclude, and don’t include significant pages that matter to your target audience. Just as even minor ad fatigue in pay-per-click advertising can drain your advertising budget, excluding the wrong pages can cost an SEO campaign visitors who leave because they cannot find the page they are looking for. Some of the pages you may tell Google not to index are:

- Pages blocked by ‘noindex’ tag – For pages that you do not want indexed, a ‘noindex’ directive tells the search engine not to index them. If marketing, e-commerce or SEO trends later make these pages worth indexing, remove the ‘noindex’ tag.

- Pages blocked by robots.txt – These pages are blocked by the robots.txt file, typically to keep crawlers away from private or unimportant pages. Note that robots.txt only stops crawling; it does not stop visitors from opening a page.

- Pages blocked by page removal tool – These pages are hidden by a URL removal request. Because the block is only temporary, you should also delete the page or make it return a 404 error; otherwise Google may index it again.

- Pages blocked due to unauthorized request – These pages returned a 401 error to Googlebot because they require authentication. A common mistake is moving a page from a password-protected staging website and forgetting to remove the protection, which leaves Google unable to index it. To avoid this error, you can outsource SEO reporting to experts with mastery in SEO analytics, video SEO, etc.

- Duplicate non-HTML page – You may have non-HTML files on your website, such as PDFs, that duplicate other pages and that you do not want Google to index separately. As part of website optimization, identify the files that carry the same information as another page and mark that page as canonical; because non-HTML files have no HTML head, the canonical can be sent in an HTTP Link header instead. Once Google treats the file as a duplicate of the canonical page, it will not index it separately.

- Pages with redirects – These URLs redirect to another URL, so Google indexes the redirect target instead. This is expected when you move content while updating the website, optimizing images, etc. Update internal links to point directly at the new URLs so that users clearly understand the ongoing changes.

Benefits of Google Search Console

- Free and easy tool – GSC is a free, easy-to-use tool from Google that lets you analyze the important SEO metrics that need to be optimized and check the SEO status of your website.

Just as you make bulk edits in Google Ads Editor before a campaign goes live to make the most of your ad budget, you can use this tool to report on some of the crucial metrics. And just as you link Google Ads with Google Analytics to see how an ad campaign is performing, you can link GSC with other Google accounts, such as Google Analytics, to prepare an SEO report that helps improve your website’s performance. You can use its query data for keyword research to choose relevant high-traffic keywords, analyze backlinks and much more, all of which helps you solve indexing issues that come up while Google crawls your site.

- Increases organic traffic – GSC gives you the index coverage report, and after fixing the issues it reports, you may see more organic traffic to your website. GSC also has an entire section, the Performance report, dedicated to search traffic, which gives you clear insight into how your audience finds you in Google Search. To see where else visitors come from, such as social media platforms or affiliate marketing websites, combine it with an attribution report from an analytics tool such as Google Analytics. With GSC helping you grow organic traffic, great content and excellent product page descriptions can then persuade visitors to take the intended action on the page.

- Website performance report – GSC gives you a detailed performance report about your website, with clear insight into how your target audience searches for you. You can use it to refine your buyer persona and optimize the website so that the next time visitors arrive, they do not have to navigate through different sections to reach the page they want. You can also use audience segmentation to help each audience find the relevant page.

You can also prepare a keyword performance report that shows which queries your website ranks for. Just as you work on your bidding strategy to improve ad rank and on PPC plans to improve keyword Quality Score, you can work on your SEO plan & strategy to improve keyword performance and optimize the keywords that are ranking poorly.

- Optimize content with search analytics – GSC gives you search analytics data that you can use to optimize the content on your website. Content will never lose its value: through content marketing during the global pandemic and Facebook’s rebrand to Meta, content has kept its important role. Use these analytics to write great content, how-to content, etc., that keeps users engaged with your website. Focus on content that matches the user’s intent, so that when Google crawls and indexes the site, the reports reflect your SEO efforts positively and indexing issues are minimized.
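
Search Console’s performance data includes clicks and impressions per query, and click-through rate (CTR) is clicks divided by impressions. One common way to choose content to improve is to look for queries with many impressions but a low CTR. A minimal sketch, assuming you have exported the query data into rows; the `QueryRow` fields and the default thresholds are hypothetical and should be tuned to your site.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    """One row of search analytics data (field names are our own)."""
    query: str
    clicks: int
    impressions: int


def low_ctr_queries(rows: list[QueryRow], min_impressions: int = 100, max_ctr: float = 0.02) -> list[tuple[str, float]]:
    """Return (query, CTR) for queries seen often but rarely clicked, worst CTR first.

    CTR is clicks / impressions. The default thresholds are arbitrary
    starting points, not Google recommendations.
    """
    flagged = []
    for row in rows:
        if row.impressions < min_impressions:
            continue  # too little data to judge
        ctr = row.clicks / row.impressions
        if ctr < max_ctr:
            flagged.append((row.query, ctr))
    return sorted(flagged, key=lambda item: item[1])


rows = [
    QueryRow("seo audit checklist", clicks=5, impressions=1000),
    QueryRow("index coverage report", clicks=50, impressions=500),
    QueryRow("gsc login", clicks=0, impressions=10),
]
print(low_ctr_queries(rows))
```

Pages ranking for the flagged queries are good candidates for a clearer title, a better meta description or content that matches the searcher’s intent more closely.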

- Analyze your link profile – GSC shows details about the links your website receives, giving you clear data on which sites link to you. Because you want users to reach your website from other online platforms, this data helps you plan strategic marketing to achieve the results you want. Links to your website can come from many platforms; track the linking sites and the linking text to see which phrases other sites use to describe you. Make sure the links to your website come from trustworthy sites and not black hat websites.
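
If you export the list of linking pages, a few lines of code can group them by site so that unfamiliar or low-quality domains stand out. A minimal sketch, assuming a plain list of linking URLs and a list of domains that you yourself consider untrusted; the helper names are hypothetical, and the untrusted list is your own judgement, not something Search Console supplies.

```python
from collections import Counter
from typing import Iterable
from urllib.parse import urlparse


def linking_domain(url: str) -> str:
    """Return the lower-case host of url without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def summarize_linking_sites(linking_urls: Iterable[str], untrusted_domains: Iterable[str] = ()):
    """Count links per linking domain and list the domains you marked untrusted."""
    counts = Counter(linking_domain(url) for url in linking_urls)
    untrusted = set(untrusted_domains)
    flagged = sorted(domain for domain in counts if domain in untrusted)
    return counts.most_common(), flagged


links = [
    "https://www.news.example/story",
    "https://news.example/other-story",
    "http://spam.example/page",
]
print(summarize_linking_sites(links, {"spam.example"}))
```

Reviewing the most frequent linking domains and the flagged ones together gives you a quick picture of whether your links come from trustworthy sites.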

Closing thoughts

Now that you have an idea of what the index coverage report covers, you are probably ready to get started with it. When Google crawls and indexes your website, the report shows you the SEO errors on different pages. Just as you don’t rebuild your email marketing list or publish new website content every day, you don’t need to check the index coverage report daily. Once you have reviewed it, you know which issues to fix. After answering questions like ‘are my pages indexed?’ and ‘can Google crawl my web pages?’, you can start improving your website. You can use robots.txt to improve crawling efficiency by keeping crawlers away from URLs that are of no use in search. As Google keeps updating the tool, we may see reports on more metrics. You can also get in touch with a digital marketing company with expertise in competition analysis, market research, reputation management, web development, etc. and have experts prepare the index coverage report for you.
