Crawling is a dynamic process closely tied to the quality and relevance of a website’s content. Content, often called king, has a strong influence on crawling activity: Google favors high-quality, user-centric content, and such content drives higher crawl demand. As part of your SEO plan and strategy, you should analyze your content thoroughly before Google crawls it. In this blog, we will explore the SEO factors that are essential for improving website visibility. We will also discuss the concept of crawl budget, which describes how crawling resources are allocated so that pages are indexed promptly, and we will emphasize the pivotal role of high-quality content in attracting both users and search engine crawlers.

Understanding user search demand helps you create content that is aligned with user intent, which increases relevance and search ranking. Prioritizing user experience, including factors like site speed and mobile-friendliness, improves both user satisfaction and search engine performance. By addressing these aspects, this blog aims to give readers the insight to anticipate crawling challenges and sustain website success.

What is crawling?

Crawling is the process by which web crawlers, or spiders, continuously discover content on a website. It covers everything on the site that bots can access: text, images, videos, and any other type of file. Whatever the format, content is found mainly through links.

Importance of crawling

Before a search engine can index a website, it needs to crawl it. Crawling involves navigating through web pages, following links, and discovering new content. For website owners and marketers, understanding what influences this crawling process can significantly impact their search engine rankings.

What is high-quality content?

It’s no surprise that if you want your website to be crawled more often, you need to create high-quality content. Every search engine designs its algorithm to prioritize the relevance, authority, and value of content. In simple words, every search engine prioritizes high-quality content. If a search engine finds flaws in your content, it will favor other websites whose content is better.

Before you dive into creating content for your website, you need to work out how to create good content, how to attract users to your website, how to keep them there longer, how to get your website to the top of search engine result pages (SERPs), and, most importantly, how to get search engines to crawl your website.

If you want answers to these questions, you are in the right place. In this blog, you will learn how website crawling works and how high-quality content can help your website get crawled more often by search engines.

Factors that search engines consider when crawling a website

- Crawl budget:- Crawl budget is a topic that has preoccupied a vast number of SEO experts for years. The myth that has been circulating in the digital marketing industry is that a crawl budget limits the number of times a bot visits your website, which negatively impacts indexing and ranking. In recent times, however, a different picture has emerged: in the majority of cases, the better your content quality is, the higher its chance of being indexed. This dismisses the misconception about crawl budget and directs attention back to developing high-quality content that both the audience and search engines find beneficial.

- Contradicting the crawl budget myth

For a long time, crawl budget was understood to mean that search engines allot a fixed number of visits to every website. On that view, if you spent the whole allowance on too many pages, crawlers would never reach your fresh material. Because of this misunderstanding, many website owners started prioritizing crawl efficiency optimization over content quality.

- Google prioritizes crawls depending on demand and quality

Google’s crawlers prioritize crawls according to demand and quality; they are smart bots, not dense droids. Well-maintained websites with good content will naturally receive more crawls than those with thin, outdated content.

- Focus on URL structure:- The job of a web crawler is to discover URLs and download the content found at them. During this process, it may pass the content to the search engine index and extract links to other web pages.

The extracted links fall into the following categories:

- New URLs: Links that the search engine has not seen before.

- Known URLs that give the search engine no guidance: These URLs will be revisited occasionally to determine whether any changes have been made to the page’s content.

- Known URLs that have been modified: These URLs should be recrawled and reindexed. The change can be signaled, for example, by the lastmod timestamp in an XML sitemap.

- Known URLs that have not been modified: These URLs will not be recrawled or reindexed.
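The four categories above can be sketched as a small decision function. This is only an illustration of the logic, not how any search engine actually works: the `classify_url` function, the `crawl_log` dictionary, and the example URLs are all hypothetical.

```python
from datetime import datetime, timezone

def classify_url(url, lastmod, crawl_log):
    """Sort a discovered URL into one of the four categories.

    crawl_log maps each known URL to the time it was last crawled
    (a hypothetical structure invented for this sketch).
    lastmod is the sitemap timestamp for the URL, or None if absent.
    """
    last_crawled = crawl_log.get(url)
    if last_crawled is None:
        return "new"            # never seen before
    if lastmod is None:
        return "no_signal"      # known, but nothing says whether it changed
    if lastmod > last_crawled:
        return "modified"       # changed since the last crawl
    return "unchanged"          # no need to recrawl

# Hypothetical crawl history for two known pages.
crawl_log = {
    "https://example.com/": datetime(2024, 5, 1, tzinfo=timezone.utc),
    "https://example.com/blog": datetime(2024, 5, 1, tzinfo=timezone.utc),
}

june = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(classify_url("https://example.com/blog", june, crawl_log))
```

A URL in the "no_signal" group would simply be revisited on a slower schedule, which is why an accurate lastmod value is worth maintaining.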

- Check page indexability:- Indexing is closely tied to crawling. In simple terms, indexing is storing and organizing the information found on a website. The bot sent by the search engine renders the code on the site the way a browser does and registers all the content, links, and metadata it finds. Indexing demands considerable computing resources, and not just storage: rendering millions of web pages takes a great deal of processing power.

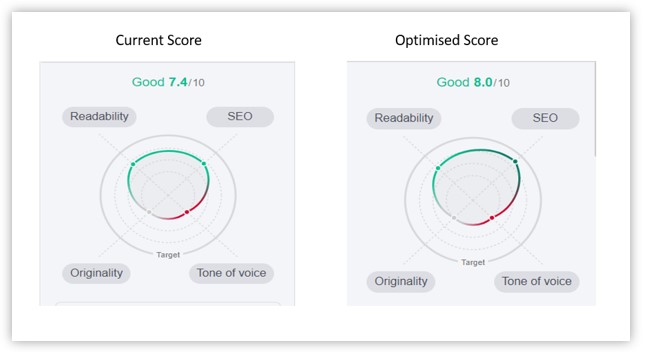

- Quality of content:- So, what in particular do search engines consider high-quality content? Although there is no universal answer, a few fundamental principles carry weight:

- Client-oriented: Are users getting what they’re looking for from your website? Does your content answer the user’s query? Your content should be informative, engaging, and well laid out.

- Expertise and authority: Does your content show deep knowledge of the topic that users are looking for? Are you citing reputable, trustworthy sources and offering something unique to the user? Search engines value content from authoritative sources.

- Dependability: Can a user depend on the accuracy and integrity of your content? Search engines pay close attention to whether a website has a solid reputation for dependability.

- Freshness of content: Are you regularly updating your content with new information and insights? A commitment to keeping your content fresh and relevant is something search engines rate highly.

- Prioritize quality over quantity:- It’s nothing new: quality carries more weight than quantity. High-quality content can not only increase crawl frequency but also bring the following benefits:

- Improve search ranking: Search engine algorithms prefer websites with consistently valuable information, which leads to higher search engine rankings.

- Enhance user interaction: Top-quality content keeps users engaged and coming back for more, which contributes to the user metrics that search engines take into consideration.

- Build the authority of your website: Over time, a reputation for excellent content establishes your website as an authoritative source and gives it a stronger presence on the internet.

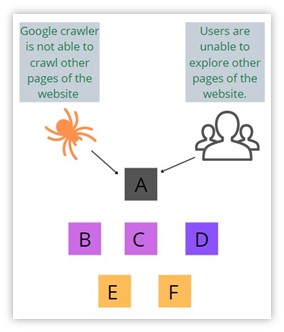

- Website structure and navigation:- The structure and navigation of a website are crucial in how effectively search engines can crawl and index its content.

- Internal linking: One crucial aspect that search engines evaluate is a website’s internal linking structure. Sites with clear, logical navigation and well-connected pages are more likely to be thoroughly crawled. For example, consider a blog where each post links to related articles, creating a network of interconnected content that is easier for search engines to discover and index.

- Sitemap: Providing a sitemap can help search engines discover and index content efficiently. A sitemap acts as a guide to your website, listing all the important pages and their relationships. By submitting your sitemap to search engines, you help ensure that all your important pages are crawled and indexed promptly.
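One way to reason about internal linking is to measure how many clicks each page is from the homepage. The sketch below runs a breadth-first search over a made-up link map; `click_depths` and the `site_links` data are hypothetical and only illustrate the idea that well-connected pages are easier to discover.

```python
from collections import deque

def click_depths(links, start):
    """Breadth-first search: fewest clicks needed to reach each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical site: each page maps to the pages it links to.
site_links = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/blog/post-2"],
    "/blog/post-1": ["/blog/post-2"],
    "/old-page": ["/"],  # links out, but nothing links to it
}

depths = click_depths(site_links, "/")
# Pages that cannot be reached by following links from the homepage.
unreachable = sorted(set(site_links) - set(depths))
print(depths)
print(unreachable)
```

Pages that land in `unreachable` are the ones a crawler following links would miss, so they are good candidates for new internal links or a sitemap entry.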

- Site performance:- A website’s performance directly impacts its crawlability and user experience.

- Page speed: Search engines prefer faster-loading websites as they enhance user experience. Use tools like Google PageSpeed Insights to optimize your website’s loading times by compressing images, minifying CSS and JavaScript, and leveraging browser caching. Improved page speed not only benefits user engagement but also encourages search engine crawlers to explore more of your site efficiently.

- Mobile-friendliness: With the growth in mobile search, having a responsive design that works well on all devices is crucial. Make sure your website is mobile-friendly by using responsive design principles and testing across various devices and screen sizes. Mobile-friendly websites are better suited to search engines that use mobile-first indexing, such as Google.

- Technical considerations:- Technical aspects of a website can influence how search engines crawl and interpret its content.

- Robots.txt: A properly configured robots.txt file tells search engine crawlers which parts of the site to crawl and which to avoid. Use robots.txt directives to keep crawlers away from irrelevant or sensitive areas, such as admin pages, internal scripts, or duplicate content. Keep in mind that robots.txt controls crawling rather than indexing, and be careful not to block crucial pages that you want search engines to crawl.

- URL Structure: Clean and descriptive Uniform Resource Locators (URLs) help search engines understand your website better. Use concise, keyword-rich URLs that accurately reflect the content of each page. Avoid long, complex URLs with unnecessary parameters or session IDs, as they can confuse search engine crawlers and reduce indexing efficiency.
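Before publishing a robots.txt file, it can help to check that it blocks only what you intend. The sketch below uses Python's standard `urllib.robotparser` module against an inline example file; the rules and URLs are invented for illustration, and `is_crawlable` is a hypothetical helper.

```python
from urllib.robotparser import RobotFileParser

# Example rules only; a real file would list your own paths.
robots_txt = """\
User-agent: *
Disallow: /admin/
Disallow: /scripts/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

def is_crawlable(url, user_agent="*"):
    """Return True if the rules above allow this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)

for url in ("https://example.com/blog/post", "https://example.com/admin/login"):
    print(url, is_crawlable(url))
```

Running a list of your most important URLs through a check like this is a cheap way to catch an accidental block before crawlers do.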

- Backlink profile:- Inbound links from other websites act as a strong signal of a site’s authority and relevance.

- Inbound links: Quality backlinks from reputable sites act as a vote of confidence, signaling to search engines that a website is authoritative and trustworthy. Focus on acquiring high-quality backlinks through content marketing, guest blogging, and relationship building within your industry. Quality backlinks not only improve your site’s crawlability but also play an important role in ranking your web pages higher in search engine result pages (SERPs).

- Website security:- Website security is increasingly important for search engines and user trust.

- HTTPS: Websites served over Hypertext Transfer Protocol Secure (HTTPS) are favored by search engines over HTTP sites. Secure your website with an SSL/TLS certificate to encrypt data transmitted between users and your server. HTTPS not only makes a website more secure but can also help its ranking, and it may make the site more likely to be crawled and indexed.

- User experience signals:- User experience metrics can indirectly influence how search engines prioritize crawling and indexing.

- Bounce rate: A high bounce rate can signal to search engines that users are not finding your website content engaging or relevant. Reduce bounce rates by improving content quality, enhancing page usability, and optimizing calls-to-action so that visitors stay engaged and explore more pages.

- Dwell Time: The amount of time users spend on a website can be a positive ranking signal. Create engaging, informative content that can make users stay on your site longer. Use multimedia such as videos, infographics, and interactive features to enhance user experience and prolong dwell time.

- Social media activity:- Social media activity can indirectly influence search engine crawl rates and indexing frequency.

- Social sharing: Content that is shared and discussed on social media platforms may receive more attention from search engines. Encourage social sharing by adding social sharing buttons to your website and consistently promoting your content on social media channels. Increased social engagement may lead to more frequent crawling and indexing of your website by search engines.

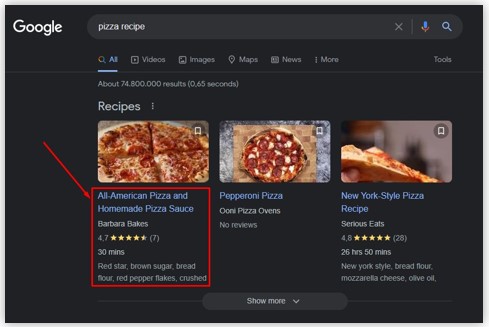

- Structured data:- Implementing structured data markup can enhance how search engines comprehend and display your content in search results.

- Schema Markup: Use schema.org markup to give search engines detailed information about your content, such as product reviews, event details, recipe instructions, and more. By implementing structured data, you can boost the visibility and relevance of your content in search engine results, potentially increasing crawl rates for pages that carry structured data.
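Structured data is commonly embedded as a JSON-LD script tag. The sketch below builds one for a schema.org `Article`; the `article_json_ld` helper and the placeholder values are hypothetical, and a real page would choose the schema.org type and properties that match its content.

```python
import json

def article_json_ld(headline, author, date_published):
    """Build a JSON-LD <script> tag describing a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">' + body + "</script>"

# Placeholder values for illustration only.
print(article_json_ld("Example headline", "Example Author", "2024-01-01"))
```

The resulting tag is placed in the page's HTML, where crawlers can read it alongside the visible content.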

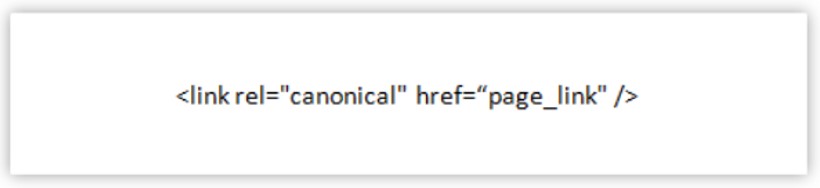

- Canonical tags:- Canonical tags help search engines identify the preferred version of duplicate or similar content.

- Canonicalization: Use canonical tags to indicate the preferred URL for content that exists in multiple locations on your website. This way, search engines can avoid indexing duplicate pages and consolidate ranking signals on the canonical version, which improves crawl efficiency and indexing accuracy.
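To audit canonical tags, you can read the `<link rel="canonical">` element out of a page's HTML. The sketch below does this with Python's standard `html.parser`; the `CanonicalFinder` class, the `find_canonical` helper, and the sample markup are hypothetical.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower()
        if tag == "link" and rel == "canonical" and self.canonical is None:
            self.canonical = attributes.get("href")

def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical

# Hypothetical page: a filtered listing that points at the clean URL.
page = '<html><head><link rel="canonical" href="https://example.com/shoes"></head></html>'
print(find_canonical(page))
```

Comparing this value with the URL that was actually requested shows which pages defer to another version and which declare themselves canonical.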

- Content accessibility:- Ensure that your content is accessible to both users and search engines.

- Accessibility standards: Follow web accessibility guidelines to make your content accessible to users with disabilities. Search engines prioritize user-friendly websites, so improving accessibility can indirectly enhance crawlability and indexing by improving overall user experience.

- Content engagement metrics:- Analyzing user engagement metrics can provide important insights into content performance and crawl priorities.

- Analytics integration: Use tools like Google Analytics to keep an eye on user engagement metrics such as time on page, scroll depth, and conversion rates. Identify the high-performing content that attracts user engagement, then keep it updated and well linked so that search engines crawl and index it frequently.

- Link equity distribution:- Optimize the distribution of link equity across your website to ensure that important pages receive sufficient ranking signals.

- Internal linking strategy: Develop an internal linking strategy that distributes link equity from high-authority pages to important target pages. By funneling link equity effectively, you can enhance the crawlability and visibility of critical content within your website architecture.
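To get a rough feel for how internal links spread link equity, you can run a simplified PageRank-style iteration over your own link map. This is a stand-in chosen for illustration, not the calculation any search engine actually uses; the `link_equity` function, the damping value, and the sample links are all assumptions.

```python
def link_equity(links, damping=0.85, iterations=50):
    """Simplified PageRank-style scores for a map of page -> linked pages."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    score = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_score = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            # A page with no outgoing links spreads its score evenly.
            targets = links.get(page) or list(pages)
            share = damping * score[page] / len(targets)
            for target in targets:
                new_score[target] += share
        score = new_score
    return score

# Hypothetical site where both the homepage and the blog link to /pricing.
site_links = {
    "/": ["/pricing", "/blog"],
    "/blog": ["/pricing"],
    "/pricing": ["/"],
}

for page, value in sorted(link_equity(site_links).items()):
    print(page, round(value, 3))
```

In this toy example, the page that receives links from both other pages ends up with a higher score than the page linked only once, which is the effect an internal linking strategy tries to create for important pages.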

- Dynamic rendering:- Dynamic rendering techniques optimize website content delivery for search engine crawlers.

- JavaScript SEO: Implement dynamic rendering to serve pre-rendered HTML to search engine crawlers, so that JavaScript-heavy websites are indexed comprehensively. Several pre-rendering tools are available to make dynamic rendering efficient and improve crawlability.
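At its core, dynamic rendering is a decision made per request: crawlers get pre-rendered HTML, and everyone else gets the JavaScript application. The sketch below shows only that decision; `BOT_TOKENS`, `is_crawler`, and `choose_response` are hypothetical names, the token list is illustrative rather than complete, and a real setup would sit in front of an actual pre-rendering service.

```python
# Illustrative substrings found in some crawler user agents; not exhaustive.
BOT_TOKENS = ("googlebot", "bingbot", "duckduckbot")

def is_crawler(user_agent):
    """Guess whether a request comes from a search engine crawler."""
    ua = (user_agent or "").lower()
    return any(token in ua for token in BOT_TOKENS)

def choose_response(user_agent, prerendered_html, app_shell_html):
    """Serve static HTML to crawlers and the JavaScript app to browsers."""
    return prerendered_html if is_crawler(user_agent) else app_shell_html

googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

print(choose_response(googlebot, "<h1>Rendered page</h1>", "<div id='app'></div>"))
print(choose_response(browser, "<h1>Rendered page</h1>", "<div id='app'></div>"))
```

Both responses should carry the same content; only the way it is delivered differs.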

- XML Sitemaps:- Optimizing your XML sitemap helps search engines discover and index important website content.

- Sitemap optimization: Regularly update and optimize your XML sitemaps so that they include all critical URLs and prioritize important pages, then submit them to search engines, for example via Google Search Console. XML sitemaps give search engines a roadmap to crawl and index essential content efficiently.
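Keeping a sitemap up to date is easier when it is generated rather than edited by hand. The sketch below builds a minimal sitemap with Python's standard `xml.etree.ElementTree`, using the namespace defined by the sitemaps.org protocol; `build_sitemap` and the example URLs and dates are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")

# Placeholder pages for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-06-01"),
    ("https://example.com/blog", "2024-05-20"),
])
print(sitemap_xml)
```

The output can be saved as `sitemap.xml` and regenerated whenever pages are added or updated.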

- Crawlability testing:- Conducting regular crawlability tests identifies and resolves technical issues that hinder website indexing.

- Crawler Simulation Tools: Use tools like Screaming Frog SEO Spider to simulate search engine crawlers and identify crawlability issues such as broken links, redirect chains, and orphaned pages. By addressing technical errors, you can improve crawl efficiency and maximize indexing potential.
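The kinds of checks a crawl simulation performs can be sketched on a small scale. The example below takes a hypothetical crawl result, with status codes and redirect targets already collected, and reports broken URLs and redirect chains longer than one hop; `redirect_chain`, `audit`, and the sample data are invented for illustration and are not the output format of any particular tool.

```python
def redirect_chain(url, redirects, max_hops=10):
    """Follow redirects from url and return every URL visited, in order."""
    chain = [url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in chain[:-1]:  # redirect loop: stop after recording it
            break
    return chain

def audit(statuses, redirects):
    """Return broken URLs (4xx/5xx) and redirect chains with multiple hops."""
    broken = sorted(url for url, code in statuses.items() if code >= 400)
    chains = {}
    for url in redirects:
        chain = redirect_chain(url, redirects)
        if len(chain) > 2:  # more than a single redirect
            chains[url] = chain
    return broken, chains

# Hypothetical crawl result.
statuses = {"/": 200, "/old": 301, "/older": 301, "/gone": 404}
redirects = {"/older": "/old", "/old": "/"}

broken, chains = audit(statuses, redirects)
print(broken)
print(chains)
```

Each multi-hop chain is a candidate for a direct redirect to the final URL, and each broken URL needs either a fix or the removal of links pointing to it.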

Conclusion

In conclusion, understanding how crawling and indexing work is essential for anyone involved in SEO or website optimization. You need to focus on the key factors that influence crawling, such as crawl budget, high-quality content, and user experience. Contrary to the misconception that crawl budgets simply limit crawls, the emphasis is now on content quality, relevance, and user satisfaction.

Creating high-quality content remains a cornerstone of successful crawling and indexing strategies. Search engines prioritize content that is client-oriented, authoritative, reliable, and regularly updated. Quality content not only enhances crawl frequency but also improves search rankings, user engagement, and website authority over time.

Additionally, technical aspects like site structure, performance, security, and accessibility play vital roles in facilitating efficient crawling and indexing. Internal linking, sitemaps, schema markup, canonical tags, and dynamic rendering techniques further optimize crawlability and enhance content visibility in search results.

Regular monitoring of analytics, conducting crawlability tests, and adapting to evolving search engine algorithms are essential for maintaining optimal crawling performance. By prioritizing quality content creation, technical optimization, and user-centric design, website owners can ensure that their sites are crawled efficiently and indexed accurately, ultimately improving their visibility and search engine rankings.
